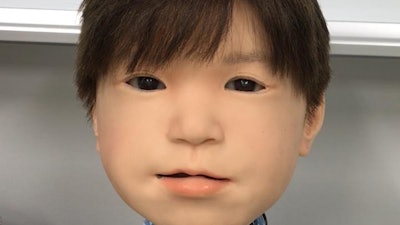

A Japanese research team reports that the next incarnation of their small humanoid robot will be able to produce a far broader range of facial expressions, allowing for more human-like interaction.

Osaka University researchers first debuted their Affetto android in a 2011 paper. The robot was designed to have the face of a child, but the technical and mathematical challenges of mimicking the vast array of human facial expressions left the original Affetto with something of a blank stare.

As they developed the second generation of Affetto, however, the roboticists measured three-dimensional movements at 116 “deformation units” (sites of distinct facial movement) on the android’s face. They then used mathematical modeling to develop movement patterns.

The researchers then balanced the applied forces and adjusted the robot’s synthetic skin so they could precisely control facial motions and “introduce more nuanced expressions, such as smiling and frowning.”

“Android robot faces have persisted in being a black box problem,” Hisashi Ishihara, the report’s first author, said in a statement. “They have been implemented but have only been judged in vague and general terms.”

The researchers suggested their method could eventually help robots “have deeper interaction with humans,” an ability likely to grow in importance as robotics and artificial intelligence become more common in daily life.